OmniVision’s OV5645 is a high performance, 5-megapixel system-on-chip (SOC) ideally suited for the cost-sensitive segment of the mobile handset market. The CameraChip™ sensor’s single MIPI port replaces both a bandwidth-limited DVP interface and a costly embedded JPEG compressor, allowing the new OV5645 sensor to save significant silicon area and cost. An embedded autofocus control with voice coil motor driver offers further cost savings for the end user, making the OV5645 a highly attractive alternative to other 5-megapixel sensors currently on the market.

The OV5645 also features a new picture-in-picture (PIP) architecture that offers an easy-to-implement, low-cost dual camera system solution for mobile handsets and smartphones. The feature is based on a master/slave configuration where a front-facing camera (OV7965) can be connected through the OV5645 master camera, enabling a two-camera system with PIP functionality without the need for an additional MIPI interface into the baseband processor.

Built on OmniVision’s 1.4-micron OmniBSI™+ pixel architecture, the OV5645 offers high performance 5-megapixel photography and 720p HD video at 60 frames per second (fps) and 1080p HD video at 30 fps with complete user control over formatting and output data transfer. The sensor’s 720p HD video is captured in full field-of-view with 2×2 binning, which doubles the sensitivity and improves the signal-to-noise ratio (SNR). A unique post-binning, re-sampling filter function removes zigzag artifacts around slant edges and minimizes spatial artifacts to deliver even sharper, crisper color images.
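The 2×2 binning described above can be illustrated with a minimal sketch. It assumes a monochrome frame stored as a list of rows and simply averages each non-overlapping 2×2 block; the function name `bin_2x2` is hypothetical, and a real color sensor bins same-color pixels of its color filter pattern on-chip, which this simplification ignores. Averaging four samples with independent noise reduces the noise by a factor of about two, which is the intuition behind the SNR gain.

```python
def bin_2x2(frame):
    """Average each non-overlapping 2x2 block of a 2D list of pixel values.

    Simplified monochrome model (assumption): real sensors bin pixels of
    the same color channel, not arbitrary neighbors.
    """
    rows, cols = len(frame), len(frame[0])
    if rows % 2 or cols % 2:
        raise ValueError("frame dimensions must be even for 2x2 binning")
    return [
        [
            (frame[r][c] + frame[r][c + 1]
             + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# A 4x4 test frame becomes a 2x2 frame: half the width and half the height.
frame = [
    [10, 12, 20, 22],
    [14, 16, 24, 26],
    [30, 32, 40, 42],
    [34, 36, 44, 46],
]
binned = bin_2x2(frame)
print(binned)  # [[13.0, 23.0], [33.0, 43.0]]
```

The output keeps the full field of view while quartering the pixel count, which is why binned video modes can run at higher frame rates than full-resolution capture.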

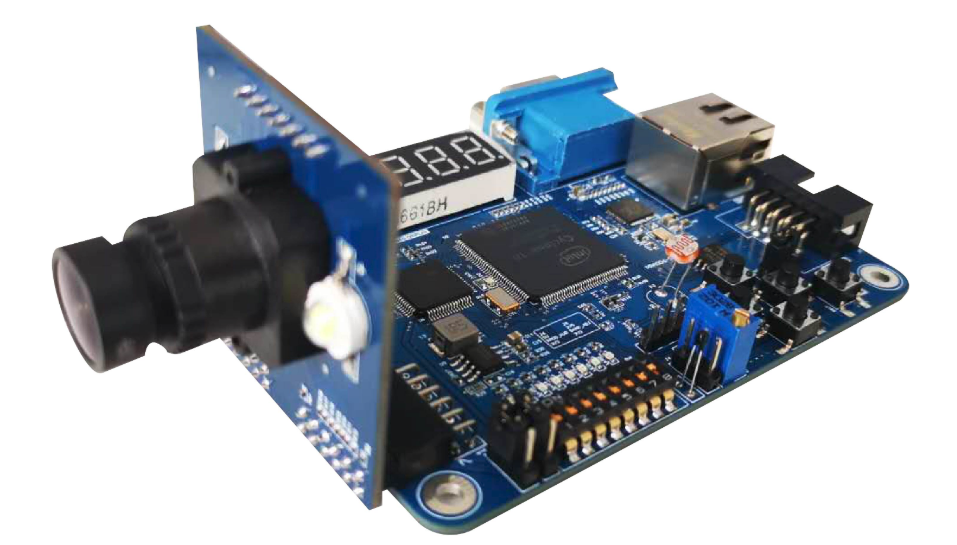

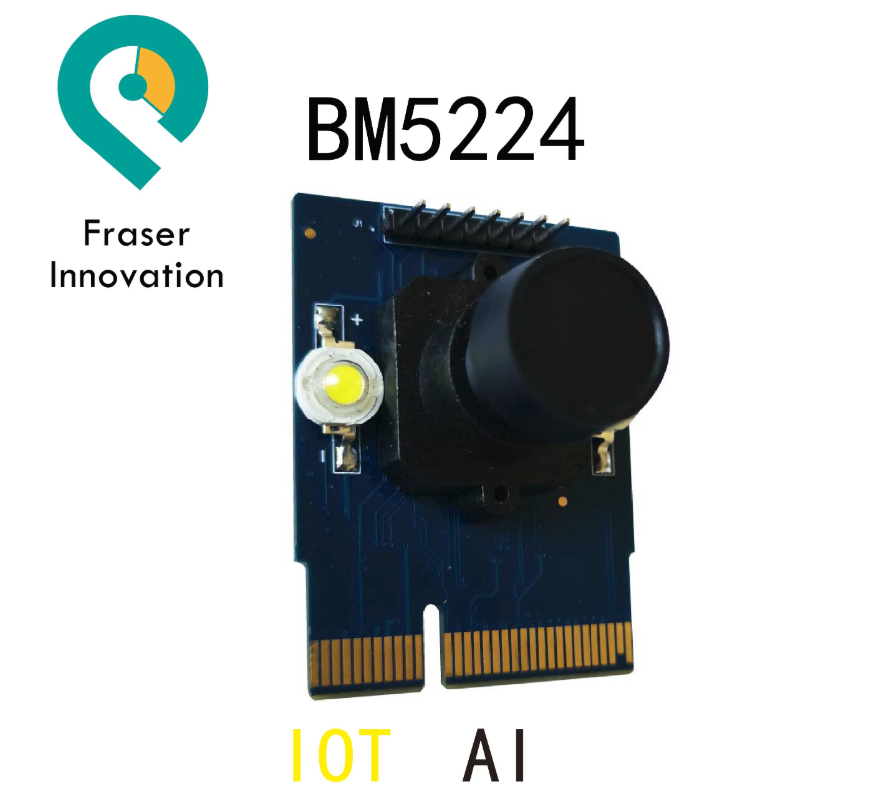

Fraser Innovation Inc (FII) develops the BD5640, a camera module based on the OV5640 CMOS video sensor. The BD5640 supports the PMOD connector and is compatible with a range of FII development boards.

1. Introduction

The FII-BD5640-PMOD is a camera module that integrates the OmniVision OV5640 5-megapixel (MP) color image sensor together with a compatible power supply and oscillator. The board can be used with a range of FII FPGA development boards. The BD5640 is powered from 3.3 V (3V3). The sensor includes many internal processing functions that can adjust white balance, saturation, hue, sharpness, and gamma correction.

The output data interface supports both a general digital output interface and a dual-lane MIPI CSI-2 interface, so it can provide enough data bandwidth for common video streaming formats such as 1080p and 720p. The OV5640 (color) image sensor uses the DVP data interface and the SCCB control interface.

2. Basic Features
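As a rough illustration of the SCCB control interface, the sketch below builds the byte sequence for a single register write. It assumes the usual SCCB three-phase write (device write address, register address, data), a 16-bit register address sent high byte first, and a device write address of 0x78; these values, the helper name `sccb_write_frame`, and the example register 0x3008 with value 0x02 are assumptions for illustration and should be checked against the OV5640 datasheet before use.

```python
SCCB_WRITE_ADDR = 0x78  # assumed 8-bit device write address; verify in the datasheet


def sccb_write_frame(reg_addr, value, dev_addr=SCCB_WRITE_ADDR):
    """Return the bytes sent on the bus for one SCCB register write.

    Layout (assumed): device write address, register address high byte,
    register address low byte, data byte.
    """
    if not 0 <= reg_addr <= 0xFFFF:
        raise ValueError("register address must fit in 16 bits")
    if not 0 <= value <= 0xFF:
        raise ValueError("register value must fit in 8 bits")
    return bytes([dev_addr, (reg_addr >> 8) & 0xFF, reg_addr & 0xFF, value])


# Illustrative register and value only (hypothetical for this example).
frame = sccb_write_frame(0x3008, 0x02)
print(frame.hex(" "))  # 78 30 08 02
```

On an FPGA board the same four bytes would typically be shifted out by a small SCCB or I2C master state machine, with a table of such register/value pairs forming the sensor's initialization sequence.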

- 5MP color system-on-chip image sensor

- General digital output and dual-lane MIPI CSI-2 image sensor interfaces

- Supports QSXGA@15Hz, 1080p@30Hz, 720p@60Hz, VGA@90Hz, and QVGA@120Hz

- Output formats include RAW10, RGB565, CCIR656, YUV422/420, YCbCr422, and JPEG compression

- M12 22mm lens mount with M12 3.6mm focus lens

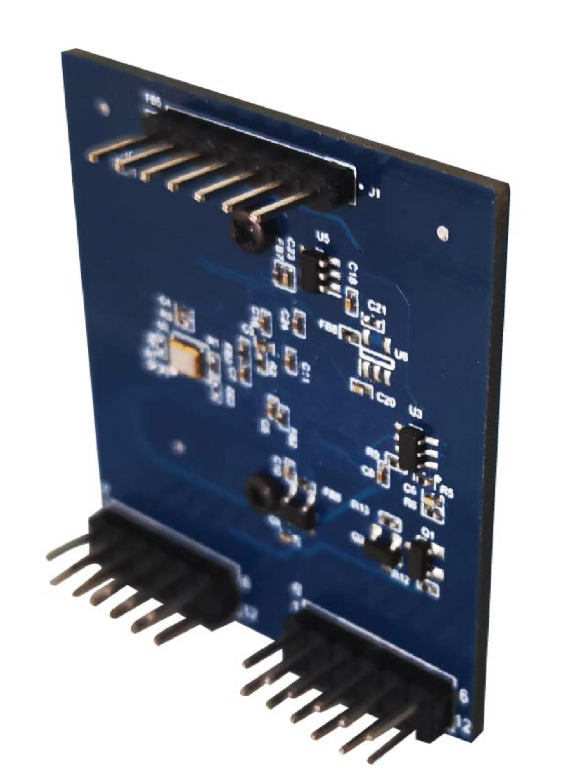

- Small PCB size for flexible designs (40 mm × 44 mm)

- Powered from a double standard PMOD connector

- Supports FII-7030 and other FII FPGA development boards
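To put the listed modes and output formats in perspective, the following sketch estimates the raw pixel payload rate as width × height × frame rate × bits per pixel. The frame dimensions are assumptions (2592×1944 is taken as the full-resolution QSXGA size of this sensor), the names `MODES`, `BITS_PER_PIXEL`, and `payload_bits_per_second` are hypothetical, and the figures ignore blanking intervals and protocol overhead, so real interface requirements are somewhat higher.

```python
# Assumed frame sizes (width, height) and frame rates for the listed modes.
MODES = {
    "QSXGA": (2592, 1944, 15),
    "1080p": (1920, 1080, 30),
    "720p": (1280, 720, 60),
    "VGA": (640, 480, 90),
    "QVGA": (320, 240, 120),
}

# Average bits per pixel for some of the uncompressed output formats.
BITS_PER_PIXEL = {"RAW10": 10, "RGB565": 16, "YUV422": 16, "YUV420": 12}


def payload_bits_per_second(mode, fmt):
    """Active-pixel payload rate in bits per second (no blanking, no overhead)."""
    width, height, fps = MODES[mode]
    return width * height * fps * BITS_PER_PIXEL[fmt]


for mode in MODES:
    rate = payload_bits_per_second(mode, "YUV422")
    print(f"{mode:>5} YUV422: {rate / 1e6:8.1f} Mbit/s")
```

Under these assumptions, 1080p at 30 Hz in YUV422 needs about 995 Mbit/s of payload and 720p at 60 Hz about 885 Mbit/s, which gives a sense of the bandwidth the output interface has to sustain.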